The True Nature of LLMs

Are LLM stochastic parrots or is there something deeper in there? In this new post, we dive into the nature of Large Language Models and what it means for use cases beyond conversation and generative text.

Most of us have discovered this new AI wave through ChatGPT and having human-like conversations with a machine. It is therefore not a surprise that we anthropomorphize the technology, associate LLM with the concept of language and knowledge, and expect future iterations to be "more knowledgeable".

In doing so, we are looking at them in a limited way and miss their true nature; thus limiting us in our ability to envision future usage outside the traditional conversational or generative space.

LLMs reasoning capabilities

The true marvel of LLM is not their knowledge but their reasoning capability. By analyzing inputs, and leveraging their internal representation, they can draw inferences, and generate responses that simulate human-like reasoning and planning.

Sebastian Bubeck et al, in the "Sparks of AGI" paper, show through a myriad of examples that GPT4 is more than a text generator. The author writes: "Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system".

These capabilities emerge from the combination of their predictive nature and an internal representation in which the model can ground its reasoning: "the only way for the model to do such far-ahead planning is to rely on its internal representations and parameters to solve problems that might require more complex or iterative procedures".

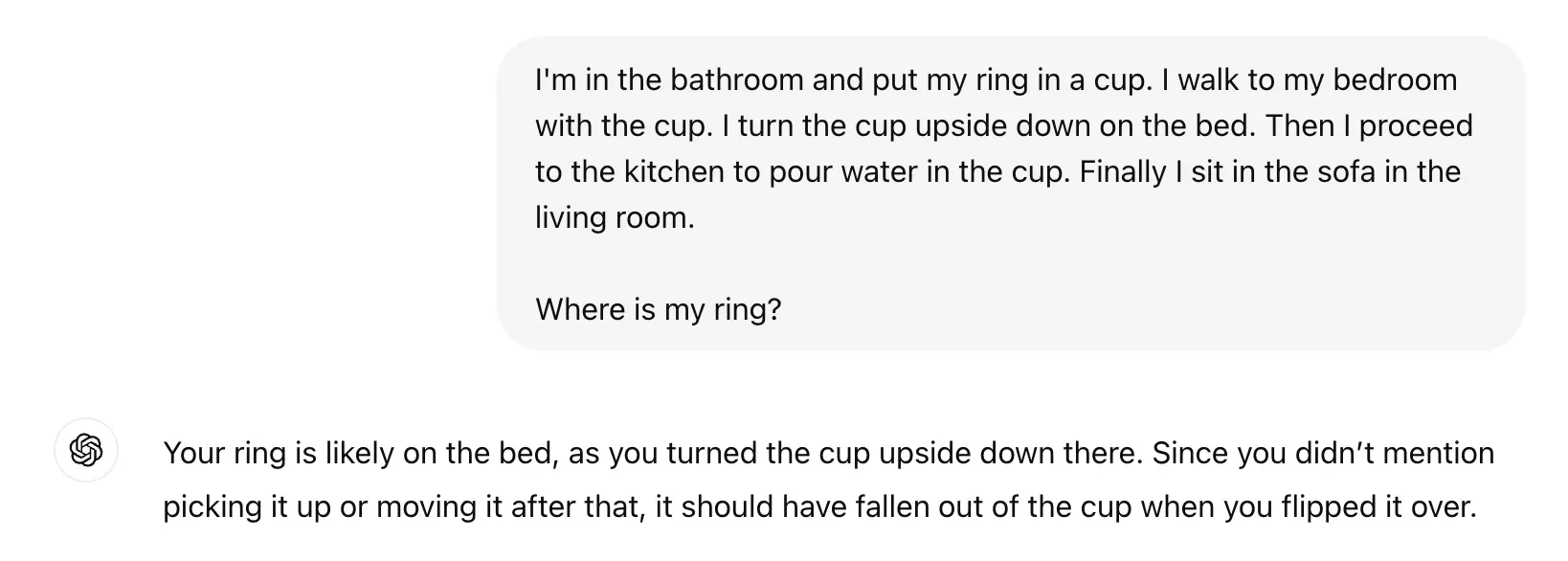

An illustrative example of grounding is the following one in which someone moves around a house with a cup containing a ring. Somehow, the LLM knows enough about gravity and the nature of a cup to reason on the correct answer. I find this fascinating.

Using a LLM as a smart lego brick

The knowledge of the LLM needs to be just sufficient to provide a "coherent world model" so that the LLM can have a ground truth when reasoning on a question. We do not need the LLM to know the lyrics of all songs, or details of everyone's biography, etc... As long as it can express the need to 'search online' and look them up Wikipedia.

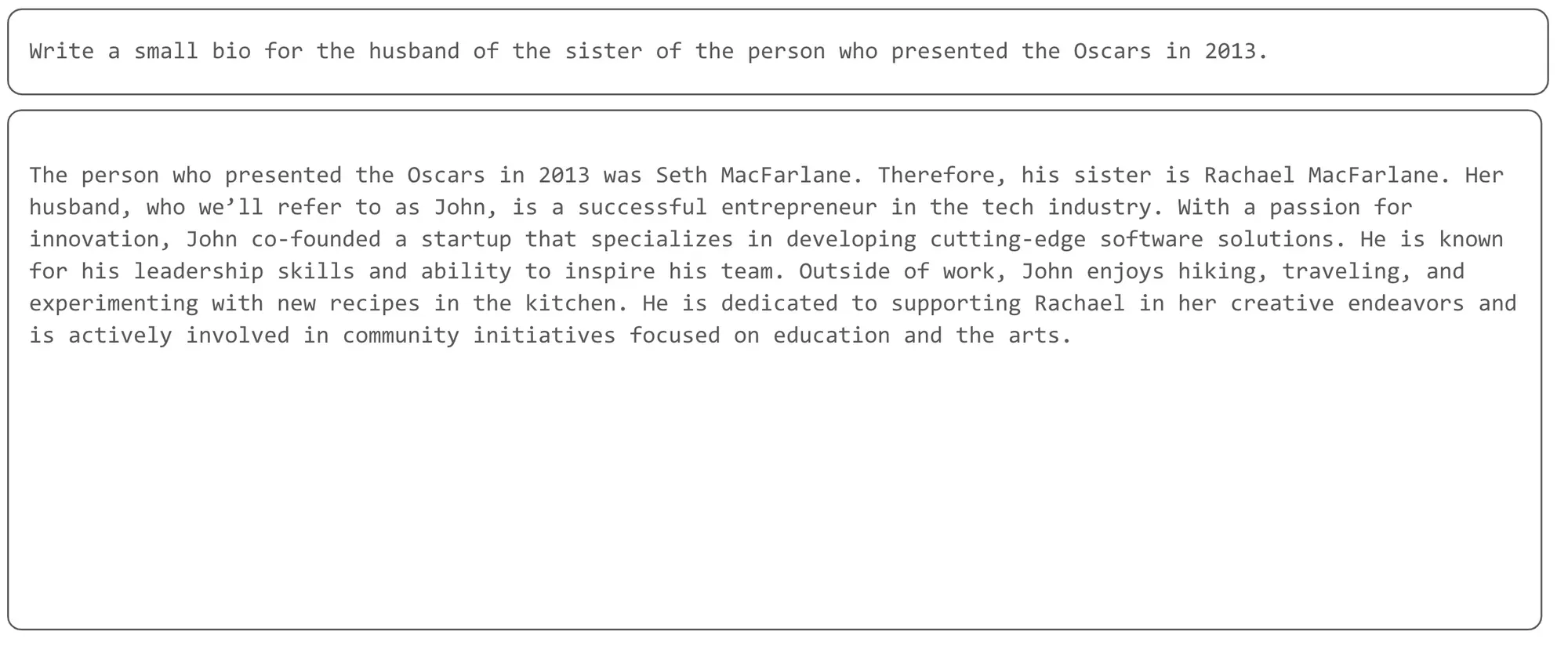

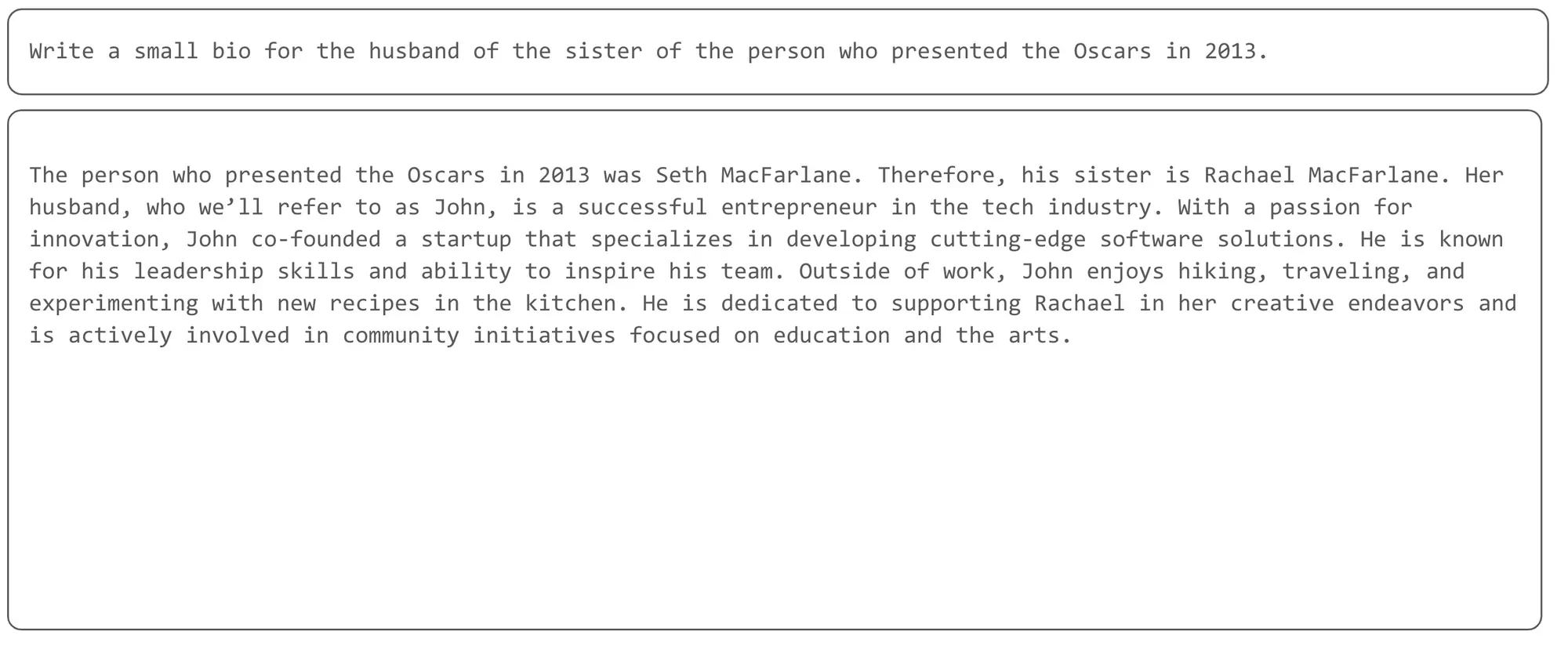

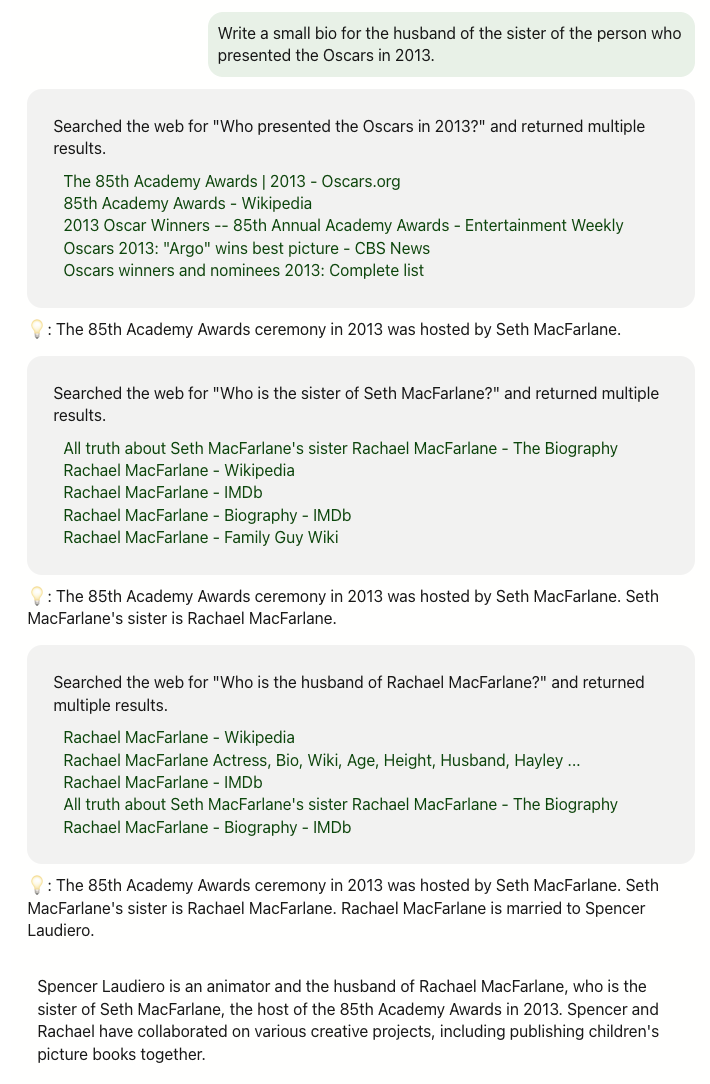

Let's look at this "simple" question: "Write a small bio for the husband of the sister of the person who presented the Oscars in 2013." - No one would expect an LLM to "know" the answer. All we need is for the LLM to be able to reason and prepare a plan on how to answer the question.

This is exactly the idea behind agentic workflows which consists in using multiple LLMs as "smart lego bricks" in a more complex architecture. This smart brick is there only to provide reasoning capability, the rest being handled by traditional code (e.g. doing a web request, sending an email, etc.).

OpenGPA is implementing an agentic workflow, using various features like reasoning, reacting (observing one thought), and tool use. It has therefore no issues to answer the tricky question. Using multiple iterative steps involving web search and web browsing.

The future is Small Reasoning Models

I do believe the future is bringing the reasoning capabilities of Large Language Models into Small Reasoning Models. We do not need the knowledge part of models as long as we keep the reasoning. What this means is we can have much smaller models with as minimum knowledge as possible, as long as there is enough to support reasoning capabilities anchored in ground truth.

In a recent interview on No Priors, Andrej Karpathy states something similar: "the current models are wasting tons of capacity remembering stuff that doesn't matter [...] and I think we need to get to the cognitive core, which can be extremely small".

He even suggests that models could be compressed to their 'thinking' core with less than 1 billion parameters, without losing any of their reasoning capability. The challenge is to properly clean/prepare the training data so we keep the important stuff to learn a world model and trash all the waste that "fills the memory" of the model.

If we get there, it would mean that frontier-level reasoning is becoming possible as an edge capability. Add to this the recent discovery of porting small models to FPGA, and you get smart dust. For real this time.

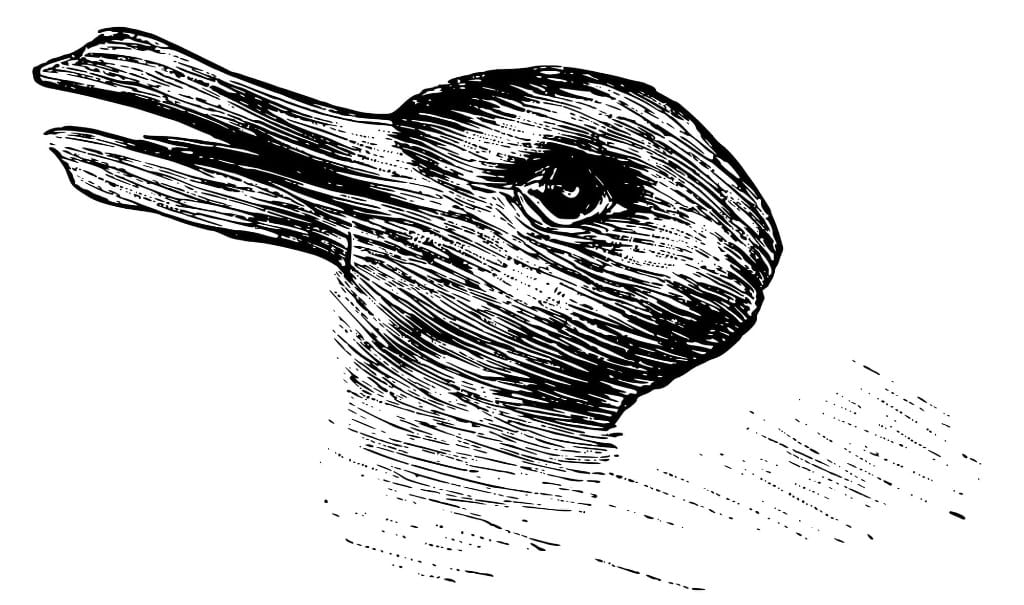

This post wouldn't be complete without highlighting that there still is an ongoing debate on the nature of the internal representation of these models.

It makes no doubt that the capabilities of these models imply that they have an internal representation that goes beyond the next token probability distribution. The question remains however on the exact nature of this representation and, more important, if it consists of a coherent whole.