v0.3 - Getting Serious!

OpenGPA v0.3 introduces enterprise-ready features for self-hosted AI agent workflows. This major update includes a new React/TypeScript UI, REST API integration, user management, and optimized performance with Qwen 2.5 models. It enables private AI automation with Docker-based deployment.

For newcomers: OpenGPA is an open-source platform for building and running AI agent workflows in a self-hosted environment. This release brings major improvements to enterprise readiness and usability.

TL;DR

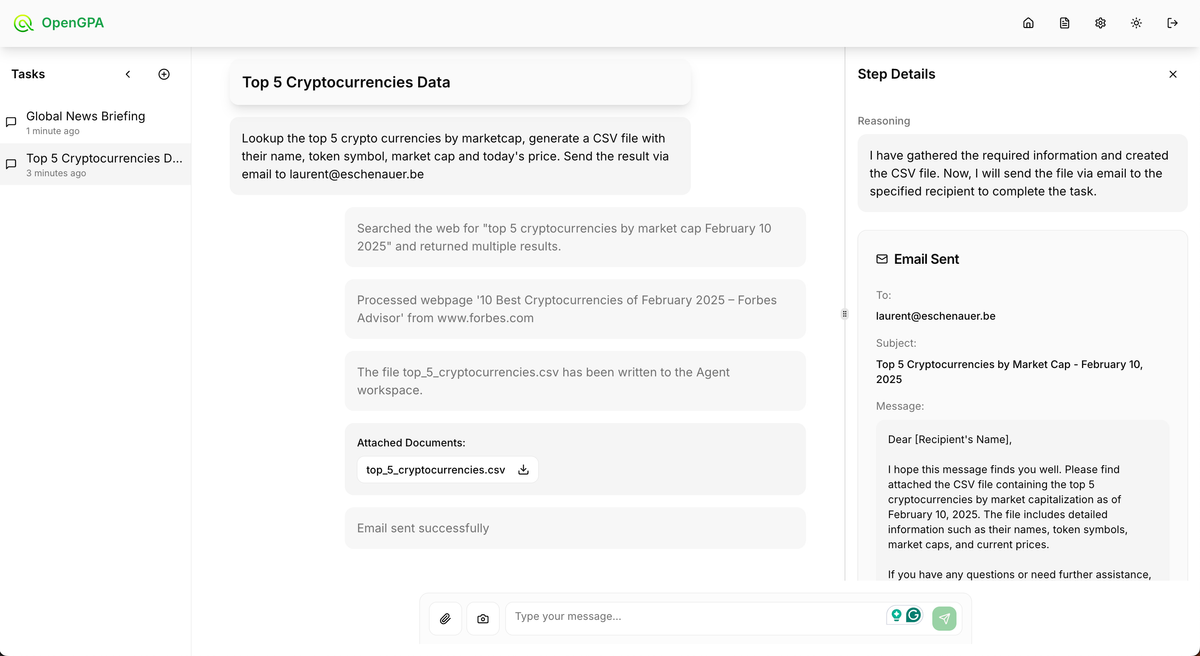

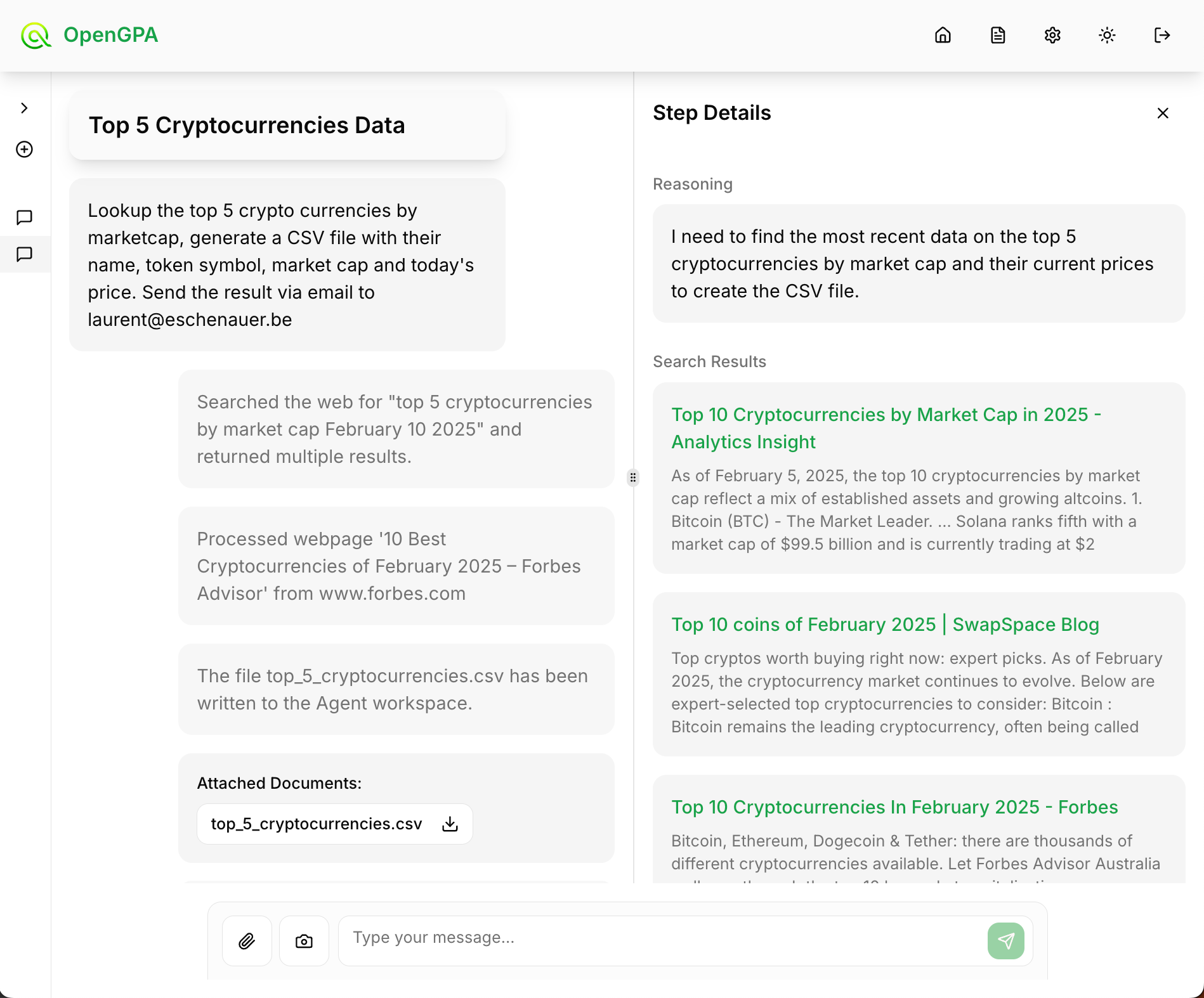

- A whole new user interface that looks much better than the previous one, written in React/TypeScript with a modular approach.

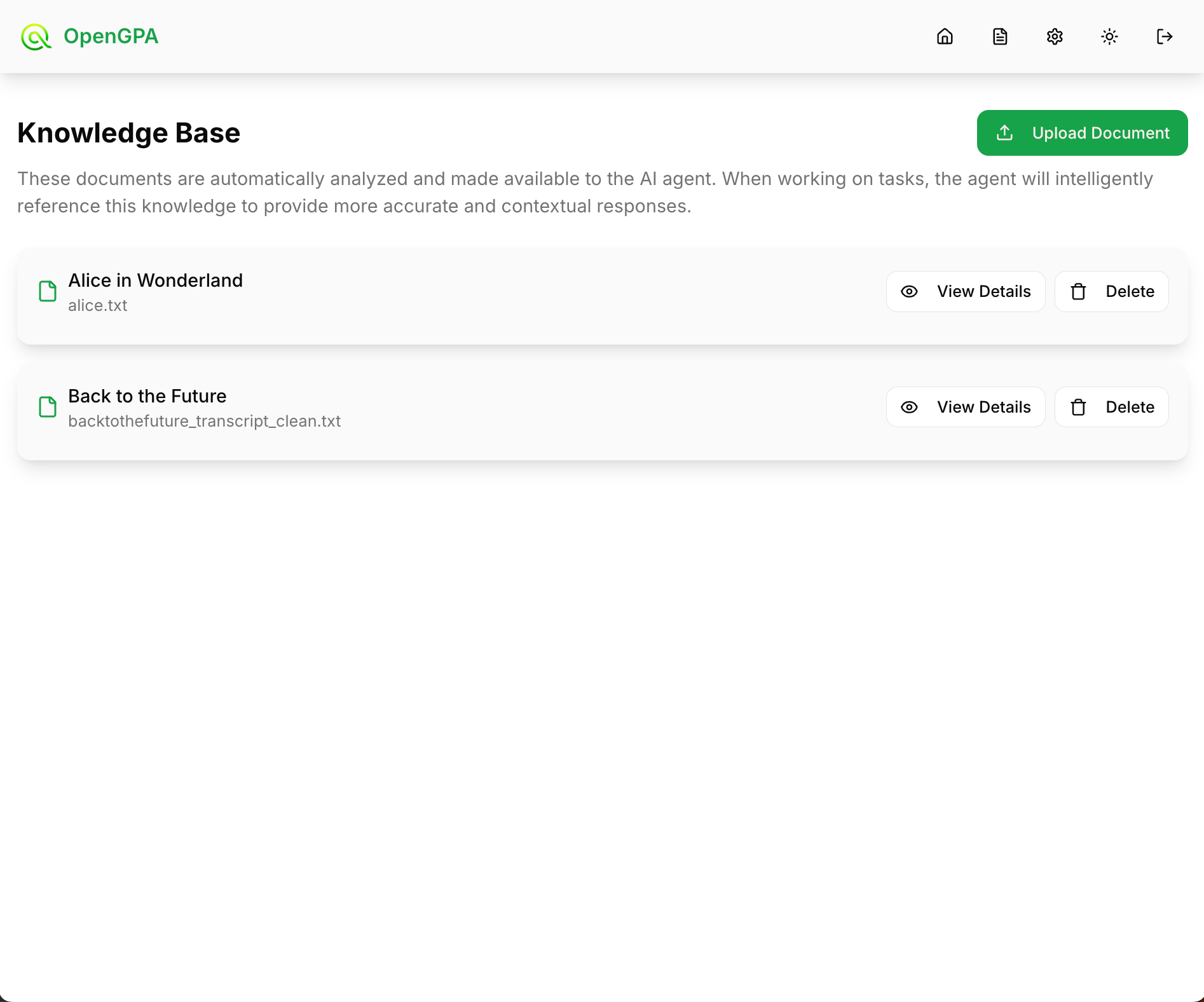

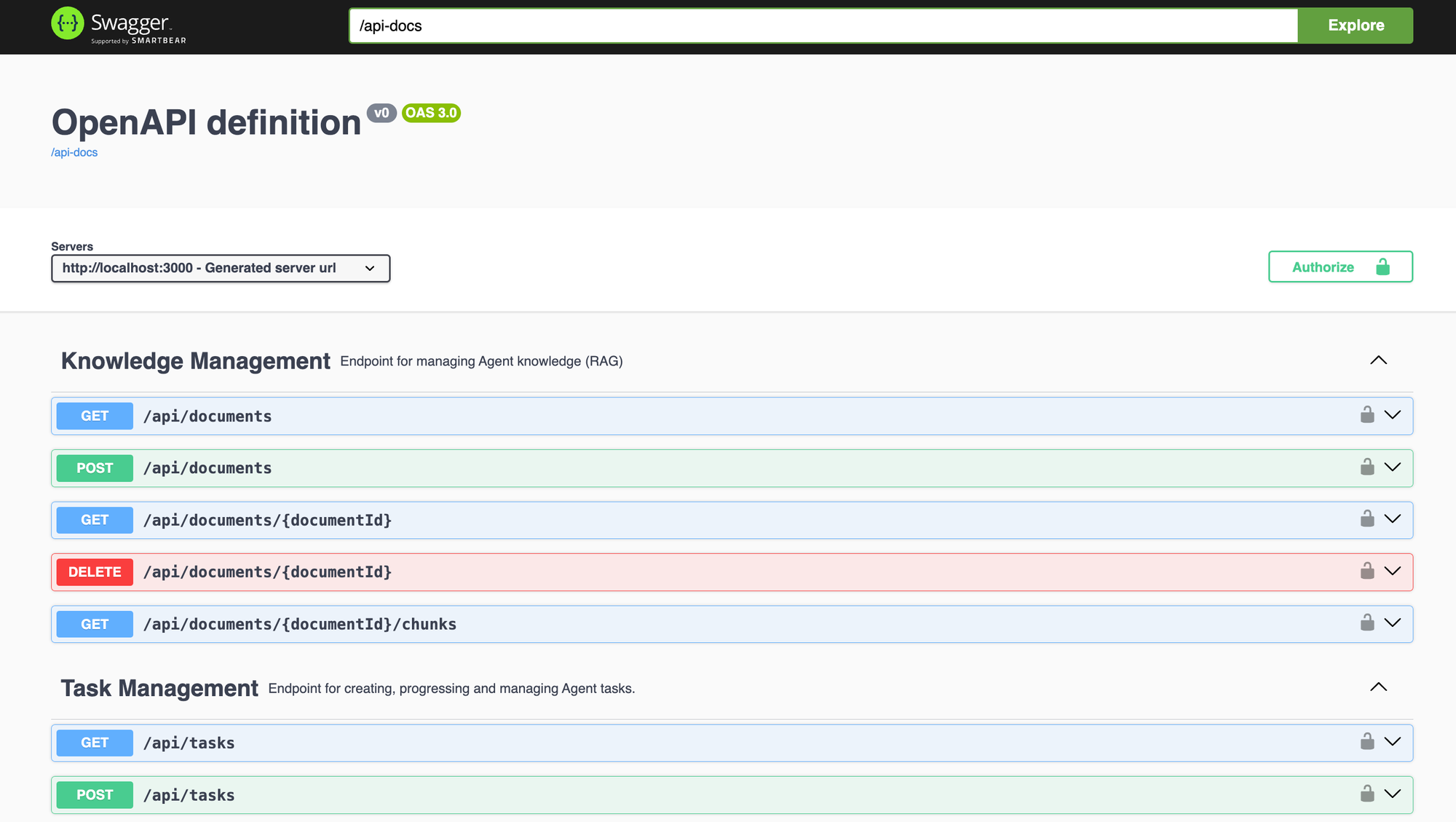

- A REST API that allows external systems to trigger agentic workflows or push documents into the RAG repository.

- A set of optimizations to run well with the newest models such as Qwen 2.5, making a self-hosted OpenGPA fully functional on a machine as small as a g5.xlarge (a single NVIDIA A10G GPU with 24 GB of memory)!

- Docker containerization to make it super easy to test, run, and deploy.

A new user interface

This one was built with a bit (actually a lot) of help from another AI agent, lovable.dev 😅. That also means it is super easy for you to remix or adapt the UI to your own needs: just hop in and remix the project!

As always, the code is entirely open source under an MIT license, making it easy to customize and low-risk to operate. Custom enterprise integration is available on demand.

REST API

The API was developed to support the new user interface, but it works equally well for integrating external systems. These can be systems calling agentic functions directly (submitting and progressing tasks) or systems pushing documents to the RAG knowledge management engine. The API documentation is available on GitHub, and an OpenAPI spec is served directly by the backend server.

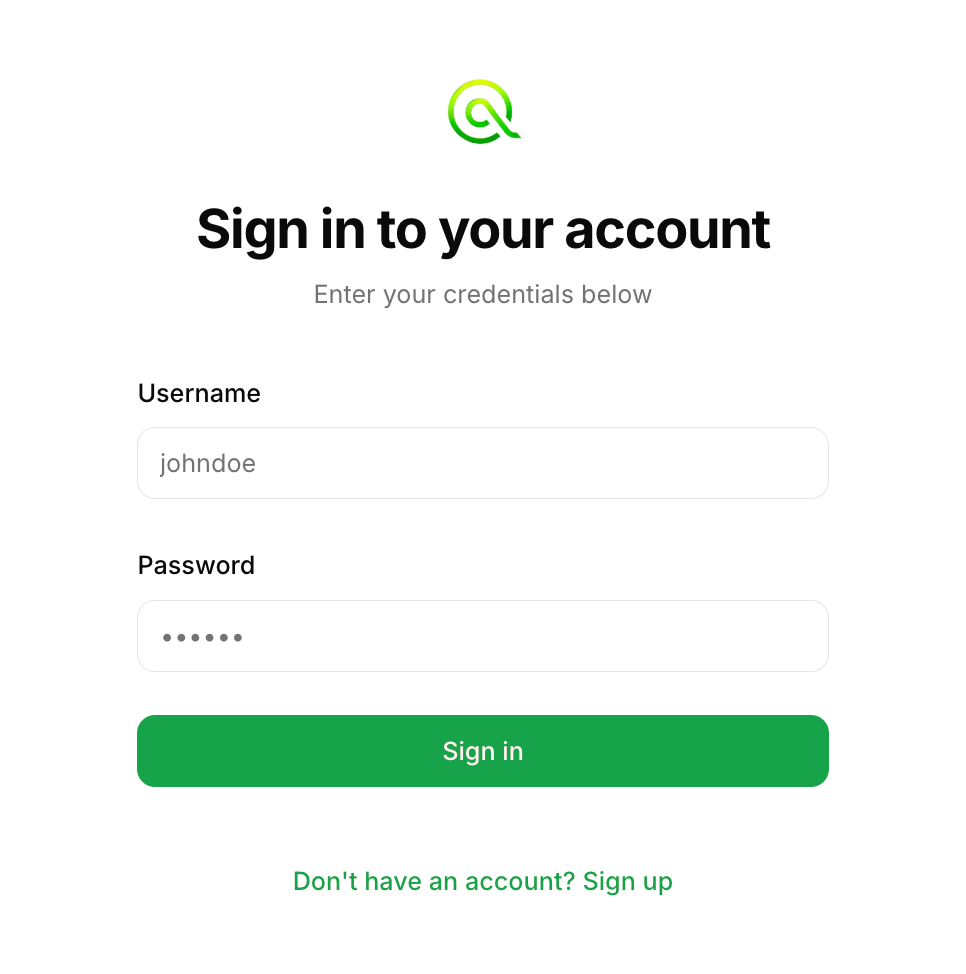

User Management

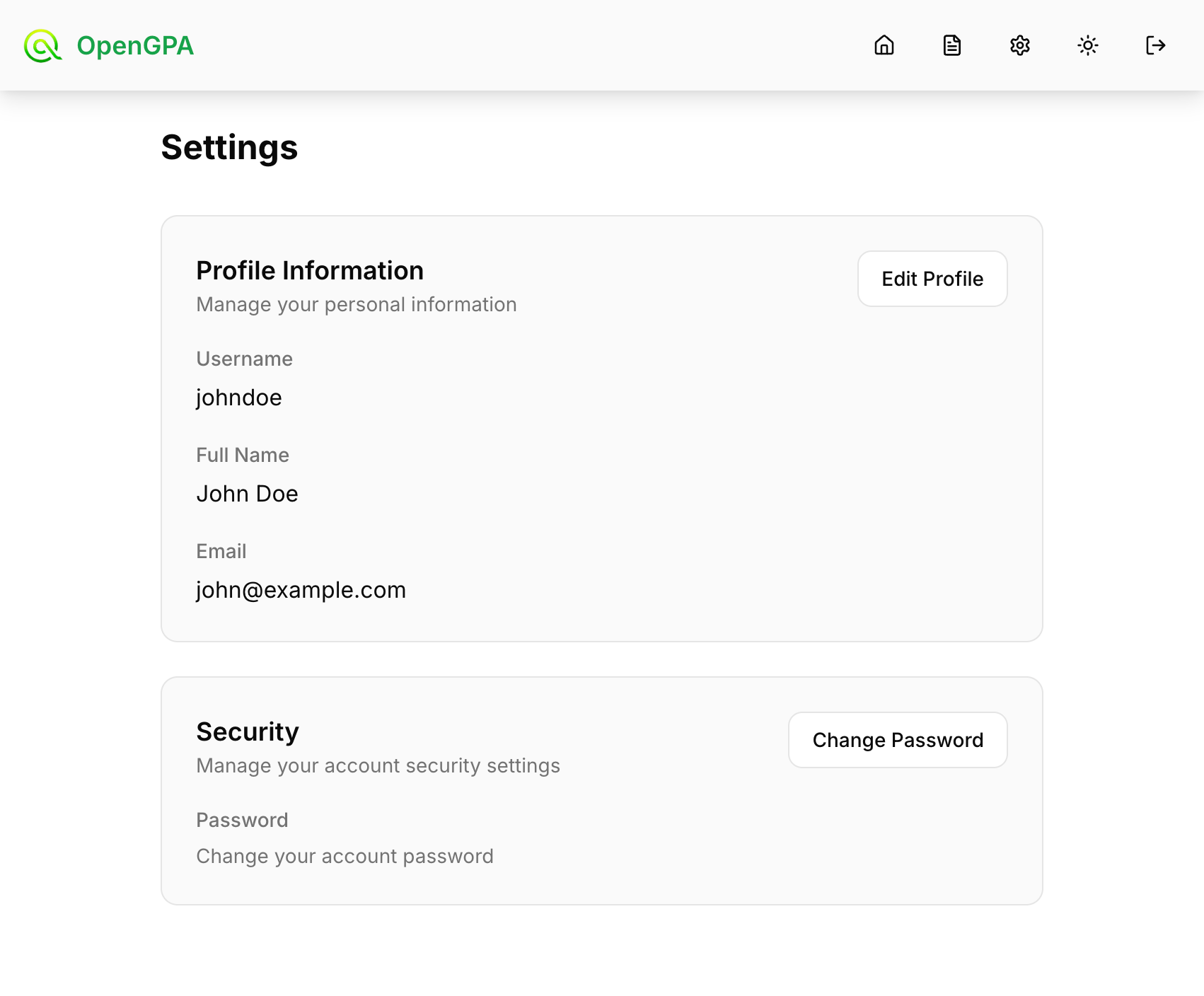

This was a major missing piece that blocked proper enterprise deployment. OpenGPA now has proper user management with a signup flow. Signup is configurable, so you can keep your system open or closed, and you can also enable an 'invite code' feature to limit who can create new accounts.

A new Settings screen supports self-service account management. It will keep evolving as I add auditing and accounting features to track usage, as well as feature flags.

Self-hosting with Qwen!

This is a major development for anyone looking to run a powerful agentic solution that is entirely private and self-hosted, and Qwen 2.5 makes it possible. I've tested on a g5.xlarge instance using qwen2.5:14b and it works superbly well. This means that agentic capabilities are now within reach of consumer GPUs with 24 GB of memory 🥳
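To see why a 14B model can fit on a 24 GB card, here is a rough back-of-envelope estimate written as a bash sketch. It assumes the published Qwen2.5-14B architecture (48 layers, 8 key/value heads, head dimension 128), an fp16 KV cache, roughly 9 GB of 4-bit quantized weights, and a 32,768-token context. These figures are assumptions for illustration rather than measurements from my setup, and the estimate ignores activation buffers and runtime overhead.

```shell
#!/usr/bin/env bash
# Back-of-envelope GPU memory estimate for qwen2.5:14b (assumed figures, not measurements).
set -euo pipefail

# KV cache bytes per token: 2 (K and V) * layers * kv_heads * head_dim * bytes_per_value
kv_bytes_per_token() {
  local layers=$1 kv_heads=$2 head_dim=$3 bytes=$4
  echo $(( 2 * layers * kv_heads * head_dim * bytes ))
}

# KV cache size in MiB for a given per-token size and context length
kv_cache_mib() {
  local per_token=$1 ctx=$2
  echo $(( per_token * ctx / 1024 / 1024 ))
}

# Assumed Qwen2.5-14B shape with an fp16 (2-byte) cache
per_token=$(kv_bytes_per_token 48 8 128 2)
ctx=32768
kv_mib=$(kv_cache_mib "$per_token" "$ctx")
weights_mib=$(( 9 * 1024 ))   # assumption: ~9 GB of 4-bit quantized weights

echo "KV cache per token: ${per_token} bytes"
echo "KV cache at ${ctx} tokens: ${kv_mib} MiB"
echo "Weights + KV cache: $(( weights_mib + kv_mib )) MiB"
```

Under these assumptions the script reports 196608 bytes (192 KiB) of cache per token, 6144 MiB for the full 32K window, and 15360 MiB in total, which leaves some headroom on a 24 GB GPU. The cache grows linearly with the context length, so a smaller window shrinks it proportionally.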

I've written a complete tutorial on setting up such a system, including how to patch Ollama's default limits to get a longer context window, which the large prompts of an agentic action model require.
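As a minimal sketch of one common approach (not necessarily the one the tutorial uses), Ollama can derive a model with a larger context window from a Modelfile that sets the `num_ctx` parameter. The 32768 value and the `qwen2.5-14b-32k` model name below are illustrative assumptions; choose a context size that fits your GPU memory.

```shell
#!/usr/bin/env bash
# Derive a qwen2.5:14b variant with a larger context window via an Ollama Modelfile.
set -euo pipefail

CTX=32768                      # assumed context size; adjust to your GPU memory
MODEL_NAME="qwen2.5-14b-32k"   # hypothetical name for the derived model

# Write a Modelfile that starts from the base model and overrides num_ctx
cat > Modelfile <<EOF
FROM qwen2.5:14b
PARAMETER num_ctx ${CTX}
EOF

# Build the derived model only if the Ollama CLI is installed
if command -v ollama >/dev/null 2>&1; then
  ollama create "$MODEL_NAME" -f Modelfile
else
  echo "ollama not found; Modelfile written. Run: ollama create $MODEL_NAME -f Modelfile"
fi
```

Ollama's API also accepts `num_ctx` per request in its `options` field; baking it into a derived model avoids having to set it on every call. How the derived model name is then wired into OpenGPA depends on your deployment.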

Easy setup with docker

Last but not least, I made it much easier for anyone to have a quick go at testing OpenGPA without having to clone, compile, and configure anything. As long as you have Docker installed, the following instructions should get you a local setup to try out, using OpenAI's gpt-4o as the LLM.

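```shell
# Download the quickstart Docker Compose file
curl -O https://raw.githubusercontent.com/eschnou/openGPA/main/docker-compose.quickstart.yml
# Store your OpenAI API key in .env (replace your-key-here with your real key)
echo "OPENAI_API_KEY=your-key-here" > .env
# Start OpenGPA in the background
docker compose -f docker-compose.quickstart.yml up -d
```

Help needed!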

This latest progress makes OpenGPA much more capable and ready to support real enterprise automation use cases. Any help testing it locally and running your own workflows and actions is much appreciated. I'd love to read your feedback and ideas for improvements.

I'm also available to explore custom enterprise integrations together, to facilitate your GenAI deployment and workflow integrations.